Are Messages From Robots Trustworthy?

From Nike and Google to Coca-Cola and McDonald’s, major brands are incorporating artificial intelligence (AI) into their advertising campaigns. But how do consumers feel about robots generating emotionally charged marketing content? That’s the question a New York Institute of Technology professor raises in a new Journal of Business Research study.

Whereas predictive AI allows marketers to forecast consumer behavior, generative AI enables them to produce novel content, including text, images, videos, or audio. For example, a recent AI-generated Toys“R”Us commercial featured a video of the company’s founder as a boy alongside its brand mascot Geoffrey the Giraffe. While many brands have trumpeted their AI-driven campaigns as a mark of innovation, others may fail to disclose AI use, leading to ethical concerns and calls for government regulation. However, even transparent brands receive backlash, as Google experienced when viewers were offended by its “Dear Sydney” ad, in which a father uses AI to help his daughter draft a fan letter to her favorite Olympic athlete.

“AI is a new territory for brand marketers, but what we do know is that consumers highly value authentic interactions with brands,” says the study’s lead author Colleen Kirk, D.P.S., professor of marketing and management. “Although more companies are now using AI-generated content to strengthen brand engagement and attachment, no study has explored how consumers view the authenticity of textual content that was created by a robot.”

Kirk and her study co-author, Julian Givi, Ph.D., a marketing researcher and faculty member at West Virginia University, completed various experiments to see how consumers react when emotional messages are written by AI. They hypothesized that consumers would view emotionally charged AI-generated content less favorably, impacting their perception of the brand and desire to interact with it.

In one scenario, participants imagined receiving a heartfelt message from a fitness salesperson who helped them buy a new set of weights. The message stated that the salesperson was inspired by the consumer’s purchase. Some participants believed the message was AI-generated, while others (the control group) believed the salesperson had drafted it personally. While the members of the control group responded favorably, those in the AI group felt that the note violated their moral principles (moral disgust). As a result, this group was also less likely to recommend the store to others and more likely to switch brands when making future purchases. Many even gave the store poor ratings on a simulated reviews site.

Other scenarios also revealed key findings in support of the researchers’ hypothesis:

- The negative effect diminishes when communications are factual or when AI is used only for editing.

- AI-generated messages in which the robot had self-autonomy (for example, an AI-generated memo signed by a chatbot) were viewed more favorably than AI-generated messages signed by a company representative.

- When participants believed that most emotional marketing communications were written by AI, they expressed disgust. The reverse was true when they believed most communications were written by a human. Therefore, brands may benefit from promoting the human origins of their products and communications.

- Human communicators (vs. AI) faced a greater “authenticity penalty” for copying emotional content.

In short, the findings suggest that companies must carefully consider how to disclose AI-authored communications, always prioritizing authenticity in their interactions with consumers. As governments increasingly seek to regulate AI disclosure, making consumers more aware of how brands craft their messages, Kirk says marketers will want to pay close attention to the study’s findings.

“Consumers are becoming ever more skeptical of the human origin of marketing communications. Our research provides much-needed insight into how using AI to generate emotional content could negatively impact brands’ perceptions and, in turn, the consumer relationships that support their bottom lines. While AI tools offer marketers a new frontier, these professionals should bear in mind a time-tested principle: authenticity is always best,” she says.

More News

Additional Alumni Named to Board of Trustees

Two New York Institute of Technology alumni have been named to the Board of Trustees, the most recent alumni to join the university’s governing board.

Intern Insight: Khushi Vasoya

Fashion and jewelry enthusiast Khushi Vasoya bridged that passion with her studies in business administration and finance while interning with LabGrown Box.

Intern Insight: Hardik Hardik

As a business technology intern, M.B.A. student Hardik Hardik worked closely with mass transit and security equipment manufacturer Boyce Technologies’ production and quality teams to support daily manufacturing operations.

Brands Must Balance AI and Authenticity

As consumer behavior expert Colleen Kirk, D.P.S., explains, in 2026, marketers leveraging AI must remember to prioritize genuine connections and transparency.

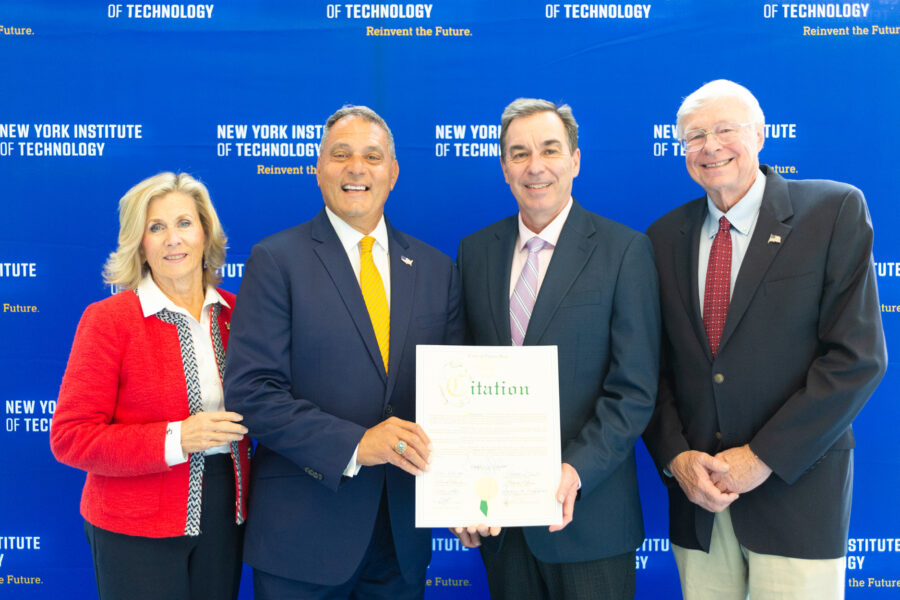

Startup Tech Central Officially Opens

Members of New York Tech and the local community gathered to celebrate the grand opening of Startup Tech Central, a resource for students to transform ideas into real-world impact.

Bridging Business Knowledge With AI

The School of Management has embraced and integrated AI into its courses, focusing on teaching students how to leverage technology to help achieve their goals in the classroom and beyond.